Not a Candidate. A Deepfake. Disinformation, Digital Resilience, and New Work Rules in the AI Era

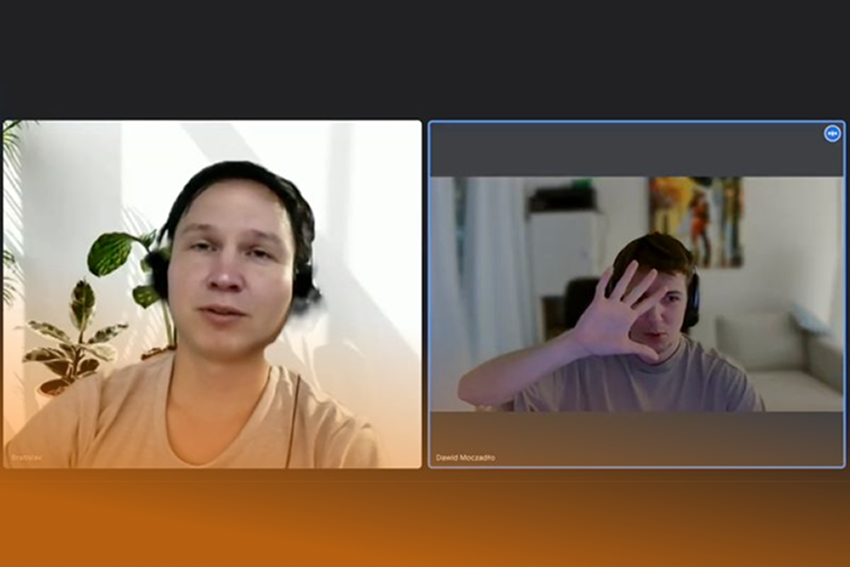

Imagine you’re conducting a job interview. The candidate looks professional, speaks convincingly, and knows the technical details of the position. But… they don’t exist.

This isn’t science fiction. In 2024, a tech company was deceived by a candidate who took part in a remote interview as a deepfake: a synthetic avatar generated with AI. The person going through the entire recruitment process was fictitious. The case was reported by The Pragmatic Engineer in the article “AI Fakers.”

In an era of remote work and global hiring, deepfakes in recruitment are no longer an exception; they are a growing threat.

Why does this matter at work? In the workplace:

- Deepfakes can lead to reputation sabotage, financial fraud, and manipulation of decisions.

- Fear of digital manipulation reduces trust in communication systems.

- Without clear, reliable information, teams are less able to act effectively in a crisis.

📊 Why should managers and HR stay alert?

- According to Du et al. (2025), 62% of employees cannot reliably distinguish a deepfake from a real person in remote interactions.

- In 2023, one in four U.S. companies reported AI-related recruitment fraud, mainly in the IT sector (Williamson & Prybutok, 2024).

- Research by Gupta et al. (2025) shows that growing fear of AI manipulation affects the quality of HR decisions and raises “technostress” in teams.

🔐 How to protect against recruitment deepfakes:

1. Use more than one communication channel

Don’t rely on a single video call. Include live technical tests, a short phone conversation, or a voice recording describing a project.

2. Observe microexpressions

Deepfakes may blink unnaturally rarely or fail to show natural facial expressions. Watch for artificial smiles, static backgrounds, or unusually perfect lighting. If something seems “too perfect,” investigate further.

3. Analyze metadata and documentation

Check that the CV matches LinkedIn, GitHub, or industry records. Compare profile creation dates and activity history, and check that the career timeline is consistent.
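For teams that screen many remote applicants, part of this cross-check can be made systematic. The Python sketch below is a hypothetical illustration, not an existing tool: the `Candidate` fields, the `timeline_flags` function, and the five-year and one-year thresholds are all assumptions, and the dates would be gathered by a recruiter from the CV and the public profile.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class Candidate:
    # Hypothetical fields a recruiter fills in by hand; not tied to any real API.
    cv_career_start: date                 # earliest job start claimed on the CV
    profile_created: date                 # creation date of the public profile (e.g. LinkedIn, GitHub)
    last_public_activity: Optional[date]  # most recent visible activity, or None if there is none


def timeline_flags(c: Candidate, today: date, min_career_years: float = 5.0) -> list[str]:
    """Return reasons to look more closely at a candidate's timeline (assumed heuristics)."""
    flags: list[str] = []
    # Years of career claimed on the CV before the public profile was created.
    years_before_profile = (c.profile_created - c.cv_career_start).days / 365.25
    # How old the public profile is, in days.
    profile_age_days = (today - c.profile_created).days
    # Assumed heuristic: a long career on paper, but a profile less than a year old.
    if years_before_profile > min_career_years and profile_age_days < 365:
        flags.append("profile created recently despite a long claimed career")
    # No visible activity at all is also worth a follow-up question.
    if c.last_public_activity is None:
        flags.append("no visible public activity")
    return flags


# Usage: a CV claiming a career since 2010, with a profile opened a few months ago.
suspect = Candidate(date(2010, 1, 1), date(2025, 3, 1), None)
print(timeline_flags(suspect, today=date(2025, 6, 1)))
```

A flag is a prompt for follow-up questions, not a verdict: a genuine senior engineer may simply have opened a LinkedIn or GitHub account late.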

4. Set HR security standards

Give managers and recruiters an internal checklist of warning signs, such as:

- No social media history

- Answers that don’t fit the situational questions asked

- Audio lagging behind or out of sync with the video
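Keeping the checklist as structured data helps every interviewer record the same signals in the same words. This is a minimal Python sketch under stated assumptions: the short keys, the `review` function, and the rule that two or more signals trigger escalation to a second reviewer (`escalate_at=2`) are hypothetical choices, not an established HR standard.

```python
from __future__ import annotations

# Hypothetical shared checklist: short IDs mapped to the warning signs listed above.
WARNING_SIGNS = {
    "no_social_history": "No social media history",
    "off_target_answers": "Answers that don't fit the situational questions asked",
    "av_desync": "Audio out of sync with the video",
}


def review(observed: set[str], escalate_at: int = 2) -> tuple[list[str], bool]:
    """Return the observed warning signs and whether to escalate (assumed threshold)."""
    unknown = observed - set(WARNING_SIGNS)
    if unknown:
        # Reject typos so every interviewer uses the same vocabulary.
        raise ValueError(f"unknown warning signs: {sorted(unknown)}")
    # Keep checklist order so reports from different interviewers are comparable.
    hits = [label for key, label in WARNING_SIGNS.items() if key in observed]
    return hits, len(hits) >= escalate_at


# Usage: two signals observed in one interview, so the case goes to a second reviewer.
hits, escalate = review({"av_desync", "no_social_history"})
print(hits, escalate)
```

Rejecting unknown keys is a deliberate design choice: a typo in an interviewer’s notes should fail loudly rather than silently drop a warning sign.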

🧭 It’s not just about technology. It’s about trust that can be broken

Deepfakes strike at the core of hiring: trust in people and data. In a world where the first contact with a candidate happens on a screen, managers and HR teams must stay more alert and critical than ever before.

🎓 Want to learn more about digital resilience?

Join the module “Digital Resilience and Critical Thinking in the AI Era.”

You will learn:

- How to identify falsehoods in digital communication

- How to avoid cognitive biases

- How to build cognitive and emotional resilience in information-rich, stimulus-heavy, and uncertain work environments

- How to collaborate consciously with AI instead of fearing it

👉 If you recruit, manage, or make decisions, sign up now.

Trust is not naivety. But it must be conscious.

📚 Sources and Research:

- Du, J., et al. (2025). The issues caused by misinformation: How workers and organizations deal with it. JASIST.

- Williamson, S.M., & Prybutok, V. (2024). The era of artificial intelligence deception. MDPI Information.

- Gupta, N. et al. (2025). Teaching Students Essential Survival Skills in the Age of Generative AI. ASEE Conference.

- Shalaby, A. (2024). Cognitive AI hazards. Springer.

- Hameed, R.M. et al. (2024). Deepfake advertising disclosure and trust. ResearchGate.

- Shin, D. (2024). Artificial misinformation: Trust in the AI era. Springer.

🔔 Sign up before the next deepfake ruins your workday.

This module will be led by Dr. Barbara Zych and a Special Guest!